Grazia Italy: Jefferson Hack by Tom Dixon

Originally featured in Grazia Italy

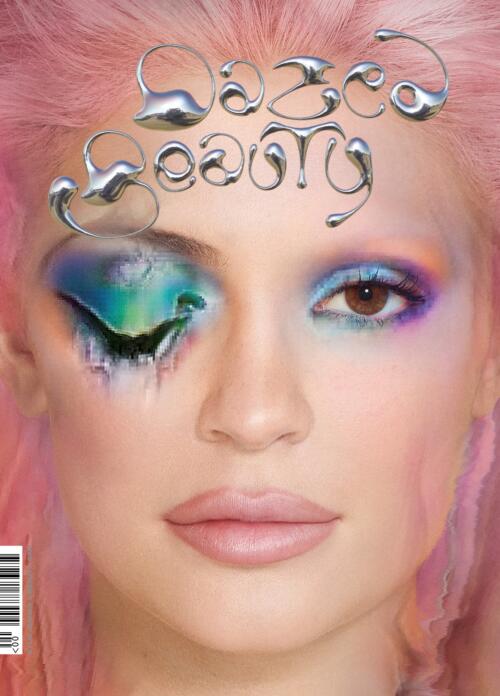

I have always been an admirer of Jefferson Hack. Hack is a publisher, creative director, author, curator, and prophet-in-general of the contemporary tide of fashion and culture. As co-founder and CEO of Dazed Media, which comprises the fashion publications Dazed & Confused, AnOther and Dazed Beauty and the online video platform NOWNESS, he has demonstrated a unique entrepreneurial attitude, a refusal to stand still, and the ability to stay elastic in a constantly changing media landscape. He’s continually looking to the future, which is why he is such an important voice to include in this issue. He has never been afraid to harness and experiment with the latest innovations in technology, from the AI-generated covers of his 2016 book We Can’t Do This Alone: Jefferson Hack the System to the much-talked-about inaugural cover of Dazed Beauty, in which Kylie Jenner’s face is distorted by AI. His work is always recognizable by its fearless creative direction. I sat down with Jefferson to hear about his forays into the world of AI, who inspires him in the field, and what he thinks it means for both the creative industries and humanity at large.

I want to hear a bit more about your experience with AI and when you first encountered it, because you are definitely an early adopter in your field. When and how did you first engage with the AI machine?

I was very inspired by Brian Eno’s work in generative music, the idea that music could be always different and ever-changing. It was kind of an early form of training data sets using language models and algorithms, and it was about randomization and unpredictability rather than predictability. It was the beginning of thinking about machines creating content beyond what was purely human. That was an early inspiration. In 2016, when we published the book [We Can’t Do This Alone: Jefferson Hack the System], I wanted each cover to be individual and random, so I needed a printer that could print each cover individually. ‘Oh, it’s impossible,’ they said, ‘there’s no such technology.’ I was doing some work with Kodak at the time, and they had these high-speed inkjet printers, so a really smart graphic designer called Ferdinando Verderi and I worked with their creative technologists to kind of hack the printer. Their tech team wrote a generative algorithm, and the AI was trained on the contents of the book; it was this early idea of the machine making its own design. Some of the covers were absolutely beautiful and some of them were completely ugly. It was a really amazing and fun project, but it also created an aesthetic in and of itself that’s been copied quite a lot by other book designers and album cover designers and stuff.

Tell me about the Dazed Beauty cover, how did it come about?

At the time (2018), large language AI models were only really being used in labs under very specific conditions. When we launched Dazed Beauty as a platform, we did an inaugural print issue with an amazing creative director called Isamaya Ffrench, and the incredible photographer Daniel Sannwald shot Kylie Jenner. The idea was that the hair and makeup looks would be created by an AI. We worked with a company in Berlin called BeautyGAN, who had a language model that scraped Instagram for imagery looking for a kind of conventional beauty, so it was trained on hair and makeup trends.

Daniel fed the images in and then it output the hair and beauty look. It was amazing: it melted half of her face; her eye was completely melted. That was a really interesting experiment. And I think some of the most interesting AI artists are working in this very collaborative way with the technology. Rather than just prompting and seeing what comes out, they’re finding ways to intelligently incorporate what AI can do, both as a critique of it and as a critique of internet culture. And 2018 was kind of an important year; a lot of really interesting stuff happened. One of those things was the DeepMind AlphaGo moment [AlphaGo is an AI system that mastered the board game Go and beat top human players]. That was the realisation: “Oh, AI can be very creative in and of itself.”

Did that magazine cover actually succeed compared to the more analogue ones? Were you able to measure it?

With something like that we didn’t really have control, and the AI made something that was totally uncommercial. I mean, it melted half of her face; it was the most uncommercial magazine cover you could ever put out. But it was a huge success for us because it was so different, and so punk in a way.

How did she feel about it?

She ended up buying the prints and hanging them in her office, yet it went against every form of conventional thinking. And that’s really the point, you know: conventional thinking leads to conceptual blindness. There are never any breakthroughs from conventional thinking or, you know, focus groups or research polls. Unfortunately, we’re very wowed by ChatGPT, watching something write answers in real time. But it hallucinates; it makes tons of mistakes. It’s never going to be very creative.

There are points of banality as well. I tried writing the questions for you using it, and it came out with the most banal shite you could ever think of.

I don’t think more is going to drive more value. We’ve just come out of a digital media hype cycle that was all about chasing eyeballs, the Vice magazine concept of scale, and it didn’t work; it was all built on a house of cards. Unfortunately, that’s exactly what’s going to happen with a whole load of AI-generated content and AI artists and AI designers: there’ll just be a whole load of crap and it won’t mean anything. The area I’m more interested in is the handful of artists and designers right now who are actually able to do something interesting with it. I think the problem with the narrative around AI is that it always becomes really binary: either it’s the end of the world and the machines will take over, or it’s going to save us and be the cure for cancer and climate change and all the ills of the world. AI is always given this big role, but ultimately it’s humans who will decide how AI is used. We really don’t need to worry about AI; we need to worry about the people who are using AI, and whether they’re going to use it in benign or destructive ways. There’s a Marshall McLuhan quote about how artists are really a kind of early warning radar system for culture, and how society needs to look to artists to show us what those early warning signs are. So I’m interested in these artists because they speak to both the good and the bad that AI can bring, and their work can help us navigate the future.

So who would you point to in your world as really understanding and producing original work then?

I would say that Refik Anadol is really interesting. His work at MoMA called Unsupervised, where he trained a model on more than 200 years of art from MoMA’s collection, was really great. I think Hito Steyerl’s done some really great AI work looking at the future: the link between AI and capitalism, AI and power. She uses AI tools to generate beautiful images of flowers, so it’s kind of like an artificial garden, but at the same time it talks about how nature is also manipulated by society, so it plays on that duality. Mat Dryhurst and Holly Herndon have a big show at the Serpentine this year. They created a kind of AI baby called Spawn, which made music using generative AI tools, and Holly+, a project in which Holly created what she calls a deepfake of herself. It’s uncanny and accurate, but there’s an aspect to it that’s a bit alien. And Es Devlin’s ‘Please Feed the Lions’, where she brought the lions in Trafalgar Square to life during the London Design Festival, was, I think, an important breakthrough moment for AI in design.

What about in fashion, is anybody actually standing out yet?

I just saw the Balenciaga Winter 2024 show, where Demna worked with an architecture studio called Sub to make an entire AI-generated environment. It was created using cityscapes from around the world, like New York melting into Tokyo, and it was a direct comment on a culture of overstimulation. There are some interesting image-makers in the fashion space using AI tools too, like the photographer Charlie Engman. He partners with Hillary Taymour on a New York label called Collina Strada, and his way of using AI is super collaborative. It feels unique and different; it doesn’t feel like it’s just machine-generated. There’s a look to all that other stuff: it just looks kind of tacky and spacey, like it belongs in a mall of the future in a Simpsons cartoon. But none of it is anything you want, right?

But that’s because Charlie Engman is training his own unique model, right? What’s he training it with, do you know?

For different projects, different things, but it’s definitely a narrow field. And I think this is the key. The problem with large language models that just arbitrarily scrape the web, which is itself a junkyard of misinformation and bad content, is that they’re never going to produce something great. There are even academic theories now about these tools leading to model collapse: as AI generates more content, future AIs will be learning from AIs rather than humans, and that will lead to a collapse of the system and its uses. One of the areas where we’ve been experimenting at Dazed, and where we’ve had some really interesting results, is in things like transcription. The ability of AI to transcribe interviews like this one is unbelievable. That used to take people days, and it’s grunt work. I think AI is really good at dealing with grunt work, where there’s not a lot of creativity needed. The other area, and this was a bit more mind-blowing for me, was audio. We’ve experimented with taking text and having AI read it back so that you can have a listenable version of an article. And it can do voices, so we spent a long time working with the company to try to get a voice that we felt represented Dazed the brand. It’s a bit like looking at typefaces: you have to get the right nuance, the right pacing.

What did you end up with as an accent? London, slightly East? And was it male or female?

Yeah, East London, female voice, but with a bit of worldliness in it [giggling]. We’re still experimenting with the intonation, the fluidity of it. I couldn’t believe that it wasn’t a real person reading it. That means you can suddenly transform a newspaper into a broadcast, and then play with it from a creative direction perspective.

Obviously you started off analogue, like me, and the digital medium has wiped out huge amounts of work for creatives. In the digital versus analogue world of your publications, are you not concerned about the constant lowering of the quality of the artefact, in a way? You like a piece of paper, don’t you? Something to hold?

Well, Dazed was a product of the technological revolution of the time, because desktop publishing meant that you didn’t have to have a huge art department. When I started working in magazines, you had at least ten people cutting out each image, cutting out the type, pasting it up. It was a hugely labour-intensive process. That entire department got wiped out. Jobs like that didn’t exist after Apple and desktop publishing tools came out. So we were kids of the desktop publishing revolution, or the Apple Mac revolution. Now you can make a magazine on an iPhone. That shift wiped out a whole generation of artists who were doing a particular trade, but it also created a new generation of designers and artists who used these new tools. So it’s about embracing change. Old jobs disappear, new jobs get created, old ways of communicating end, and new ways develop. I’m not worried about change. Being independent, coming from a place of disruption, is quite a natural place for me. The more chaos there is, the more comfortable I feel in terms of my ability to navigate it as a publisher, creative, curator… whatever the hell it is that I am!